|

Technologies--or rather, particular implementations of them--are like enzymes, dropped into our protein pool of possibilities. They lower the activation energy of various tasks. Our rate of communication is greatly increased by our mobile phones; the energy required to clean our clothes is greatly reduced by washing machines.

Lowered activation energy isn't always a good thing. How much time is idly wasted with mobile devices? How much plastic is needlessly created and cast off into the environment? Without these enzymes, would our finite human resources be more satisfyingly spent? As with biological well-being, technological health is a layered process. The enzyme suppliers must be aware of the long-term effects of their product, though the final responsibility lies with the well-informed user.

|

|

|

The Turing Test is an enduring artifact of cybernetics, computer science, and popular imagination. Although it is far from universally accepted as proof of much of anything, I'd like to briefly poke another hole or two in its premises.

The original "game" proposed by Turing involved no computers--there was one man, and one woman. A third person (a tester) was able to communicate with them only by written notes, and was tasked with determining the participants' correct genders. The twist is this: the man was instructed to trick the tester into believing he was a woman, and the woman was instructed to behave naturally. Turing then adapted the idea by replacing one participant with a computer, leaving the tester to determine which was human. If the tester failed to correctly determine which participant was the computer, then the computer could be considered intelligent.

I have three problems with this formulation:

1) It is based on deception. In the natural world, deception is practiced by both predators and prey. Successful deception by one means the suffering or death of the other. Likewise in the human, business, social, and ethical realms, we rarely hold deception to be a virtue worth building upon. If accepted at face value, this formulation devalues human intelligence by equating it with deception. As a human being, I would like to see intelligence defined in more human, and less algorithmic, terms. (Or in ruthless evolutionary terms: if computers do someday achieve intelligence, we got here first, and we should define the terms in our enduring favor.)

2) It is excessively reductive. Narrowing the channel through which intelligence must be communicated to one of such tiny bandwidth (not to mention a single channel, unlike the human experience of multiple channels and senses) intentionally privileges the computer. I might as well propose that a small box which emitted a human-sounding laugh in response to funny jokes (and no sound in response to bad ones) was intelligent--surely it requires human-like intelligence to understand when jokes are funny? Not at all.

3) It conflates the signifier with the signified. Clever strings of text do not inherently indicate intelligence. We accept text-based communication because it is a sufficient signifier of something more--another human--on the other end. We accept this signifier precisely because, historically, only a human can generate it. If computers can reliably generate that signifier then it will no longer signify what it always has. Rather than prove machine intelligence, a successful Turing test will only prove the insufficiency of the very medium it uses (devaluing it in the process).

I'm no Luddite, but we need a much-improved version of the Turing test for it to have any meaning. This will require improved definitions of what intelligence really is. We must make sure that those definitions serve the humanity they come from, rather than its by-product.

"[The Turing test] does not necessarily mean that the computer has become more human-like. The other possibility is that the human has become more computer-like." --Jaron Lanier

|

|

|

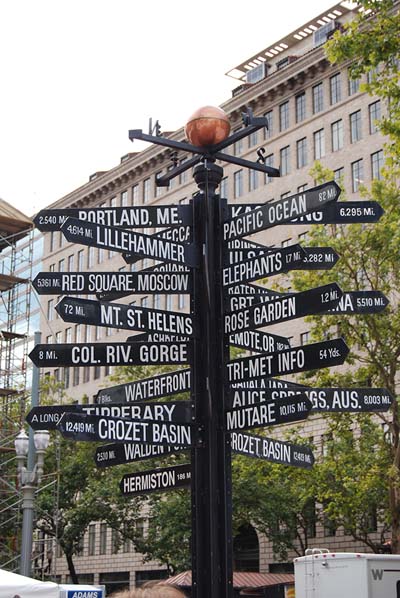

At what point does an informational tool become so overwrought that it becomes something entirely different from its base form?

A standard street sign has a known function and simple premises and affordances. It is intended to provide context within a given city. The example above, while providing some local context, is meant to set the reader within a global context. It is not "usable", but it does provide a function.

Overwrought software is nearly always frustrating. Are there cases where it begins to fill a different purpose altogether from what the designers intend? Can such products have repeat usefulness?

|

|

|

A few thoughts on Adam Greenfield's survey of the now-and-future landscape of ubiquitous computing. It is concise and well written, though not in-depth, and I would add a few points to his assessment:

On Multiplicity: the more mundane aspects, such as multiple systems knowing which are being addressed, are certainly valid engineering problems. For the issue of multiple conflicting orders (or preferences) being simultaneously delivered to a single system, I also see cause for some playfulness. Who decides a room's temperature, lighting, or mood music? Depending on the participants and the forum, this could become a metagame of its own. The interaction between individuals' static preferences and the system's processing rules could lend itself to enough fun by itself: Gamers might yield control to the player with a high score; businessmen to recent sales...the list goes on. But add the ability to incorporate performance, realtime feedback, and 'dialog' between systems, and the possibilities for a bit of fun are endless.
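As a rough illustration of how a room's "processing rules" might arbitrate between conflicting preferences, here is a minimal sketch. Everything in it is hypothetical: the `Preference` record, the `score` field standing in for a high score or recent sales, and the two rules are assumptions made up for this example, not anything Greenfield describes.

```python
from dataclasses import dataclass


@dataclass
class Preference:
    """One occupant's requested room temperature (hypothetical record)."""
    person: str
    temperature_c: float
    score: int  # assumed stand-in for "standing": high score, recent sales, etc.


def highest_score_wins(prefs):
    """Rule 1: the occupant with the highest score gets full control."""
    return max(prefs, key=lambda p: p.score).temperature_c


def score_weighted_average(prefs):
    """Rule 2: everyone has a say, weighted by score."""
    total = sum(p.score for p in prefs)
    if total == 0:
        # No one has any standing, so fall back to a plain average.
        return sum(p.temperature_c for p in prefs) / len(prefs)
    return sum(p.temperature_c * p.score for p in prefs) / total


prefs = [Preference("Ana", 19.0, 300), Preference("Ben", 23.0, 100)]
print(highest_score_wins(prefs))      # 19.0
print(score_weighted_average(prefs))  # 20.0
```

Swapping one rule for another changes who "wins" the room, which is where the metagame comes in: the choice of rule is itself something the participants could negotiate or compete over.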

On The Inescapability of One's Own Datatrail: Greenfield gives not even a passing mention to the ability to create multiple digital personas--just as most of us do now--which remain linked to each other only to the degree we explicitly allow. There's no reason why every ubiquitous system should recognize (and correlate) our behavior with every other system's recognition of us as an entity.

Two statements he makes that apply just as well to non-ubiquitous design:

"Everyday life presents designers of everyware with a particularly difficult case because so very much about it is tacit, unspoken, or defined with insufficient precision"

Social networking sites? PDAs? Maybe even personal finance software? The problem applies to these as well, to varyingly recognized degrees.

"How can we fully understand, let alone propose to regulate, a technology whose important consequences may only arise combinatorialy as a result of its specific placement in the world?" (emphasis added)

Ditto the above examples, and pretty much everything else in the world.

He also comments: "We will find that everyware is subtly normative, even prescriptive--and, again, this will be something that is engineered into it at a deep level." While there can be value in saying that certain specific technologies (and their implementations) are more or less normative than others, I would argue that for sweeping statements like this, the more relevant truth is that humans are normative (and to a hopefully slightly lesser degree, prescriptive) beings.

If ubicomp allows us to monitor our blood glucose levels in realtime, then many people will monitor theirs obsessively--even more so if they can compare it against friends, family, and coworkers. But this won't be because of any presumption of the technology. The reason we don't do it right now is more likely because we can't rather than that we wouldn't want to. This line of logic leads to an entire new world of considerations, the 'tyranny of choice' and so on. But--in an ideal world--once designers have ethically designed the information architecture, access mechanisms and so forth to be morally unimpinging, their work is far from finished. Then begins the probably far more difficult job of weighing the implications of human nature, and redesigning with that in mind. But since this is not an ideal world, users will be exposed to poorly designed, ethically challenged implementations, and will have to deal, collectively and individually, with the results--just like we do today.

|

|

|

A chuckle: Tonight I was out at the local arcade (Gameworks Seattle) doing an initial survey for a small usability study. I made notes in a voice recorder rather than pen & paper, as it was more discreet. As I was walking out the front door, preoccupied with thoughts of transcribing my notes, I (literally) ran into the quintessential Don Norman usability blunder: a nice big door with a nice big handle on which to grip and pull. The problem being that the door can't be pulled. Only pushed.

I tugged twice before laughing at myself and pushing out onto the street.

|

|

|

The Adaptive Path blog has a good entry today on the retail dressing room experience. My reaction falls into two basic parts: "good point" and "how to improve".

Part one: So, so true. The bigger the retailer, the smaller the percentage of floor space needed to devote to dressing rooms, and yet the overall resource expenditure on them appears to match the floor space. Poor lighting is the number one problem, and too-small dressing rooms the second (the nicer stores will often have nice large rooms, big enough for two people to sit, change, and view clothes). Julia's suggestion of a free stylist to improve the experience is a great idea for a value-adding service.

Part two: I'll go a step further, not on services but on a 'passive' element of experience design via architecture. Ever notice how dressing rooms are almost intentionally hidden, and/or placed in the furthest back corner of a store (again, the larger the store the greater the sin here)? Current retail space design makes it clear that placement of dressing rooms is an afterthought; something to tack on after the 'real' work of designing the floor space. A savvy retailer could make the dressing room a central focus of the store and a positive social experience. Make the dressing room central to the store rather than tangential--literally central, as an island in the middle of the floor. You might even raise it a step or two to visually highlight its importance. Tap into those anthropological associations to altars and stages. Make the dressing room the place to be and be seen, rather than browsing racks on the floor.

Of course this model isn't appropriate for every retailer, but might work wonders in younger, hipper markets. Physical centrality and elevation have generally positive psychological qualities, and creating the unspoken vibe that the dressing room is where the real shopping is done would almost certainly boost sales. Sales, and customer satisfaction with the shopping experience.

|

|

|

David Levy's dissertation/book, "Love and Sex with Robots," has gathered some media attention recently. The assertion that people will be loving, and (ahem) loving, robots before too long is apparently something of a social shocker, though it should come as no surprise. People are already having sex with life-like dolls, and people already have very strong emotional attachments to man-made objects: their cars, iPods, phones, and so on (and I would argue that, psychologically, this "love" is not so different from the human kind).

The more interesting (though less sensational) questions are social. The unity that comes from the bonding of two humans is a certain thing, though there are certainly many variations on the details. But the thing that is the relationship between human and robot will almost certainly be a qualitatively different thing. While it may (or may not) be a satisfying emotional equivalent, it will allow, provide, and mandate a new set of inputs and outputs, metaphorically and literally. Creative and business partnerships, in addition to personal relationships, will have a new frontier for development in combining the relative strengths of man and machine--as, of course, technology has done for centuries. But this technology revolution is likely to be more intensely social than anything we've seen thus far.

It is easy to dismissively conceive of robots as ambulatory PDAs, but that's a problem with our vision rather than the potential of the thing. Sex is easy to predict, almost banal. It may even be a motivating force behind the development of humanoid robots. But--as always--what will make the world different won't be what people are doing in their bedrooms, but rather what they can do outside of them.

|

|

|

Is it by design or happy coincidence that federal income taxes (in the US) are due in mid-April...and that federal elections take place in early November? What would be the effect on national politics if taxes were due on November 1st?

|

|

|

There's been a lot of speculation recently about the possibility of a Google virtual world.

Google Earth CTO Michael Jones insisted (first comment after the post) in January that Google Earth would always remain true to the real world and not dive into the type of fantasy world that Second Life has become. Therefore, Google's implementation would be more like "First Life," but in virtual form...

Instead, it makes sense for Google to mesh a bunch of its tools into one, thus creating a whole new advertising opportunity aimed at people, er, avatars, who are "walking" down virtual (real) streets to check out virtual (real) stores and businesses. And if Google wants, it could incorporate some of its more social ventures, such as social networking site Orkut and Google Talk, in order to motivate users to spend more time there.

But why even have avatars in the first place? I don't see Google trying to nudge in on the MMORPG market, not even into Second Life's quasi-game status. The potential of a serious expansion of Google Earth should be apparent--the ability to experience real places, without being there.

The difficulty is that in those real places are real people--a whole lot of them, in places where people are most likely to want to virtually 'be' (Times Square, Shibuya Crossing...). No photographic representation of those places can remove the people from them--nor would you probably want to. Real-time representation of those places would be the holy grail of virtuality, and would be possible with an array of cameras and clever interpolation technology. Personally, I'd rather inhabit (or rather, visit) those places disembodied, in a real-time crowd of the people who are actually there. A disembodied, first-person view more closely simulates the human experience than the third-person camera view common in most games.

Social networking on top of this construct could have huge potential--but is a separate consideration. The experience of presence, along with the ability to shop in the actual local retail stores, would be a novel enough lure for me and millions of others. Google won't be focusing on a 'virtual' world, but rather on the real one.

|

|

|

In a recent post, Jan Chipchase makes a worthwhile point about the "aspirational" value that items can have--value created through the interaction of appearance and certain social dynamics. This quality is not much discussed in design circles, but from a sociological perspective it is nearly omnipresent. One reason for this relative lack of design consideration may be that it is considered the proper realm of marketing. Apple, for instance, knows how to play off that aspirational need, and the success of the iPod and iPhone may well owe more to PR prowess than to their renowned design aesthetic.

But should aspirational value be left to marketing, while designers focus on 'real' use? It may seem obvious to point out that aspirational use is real use, but the designer should consider further, as it has some unique qualities. Some aspirational value can be had in private (buying that gym outfit to convince yourself that you really do intend to get in shape), but much of it is public, and social. In these cases ease of use and quick response may be paramount, as complex interaction and even small delays can create social awkwardness and reduce the perception of mastery. Functionality can be nearly zero so long as the right technical and social cues are hit.

Microsoft has often tried to sell new products (or versions) almost purely on a functional basis. This may be acceptable in some established markets such as productivity software, but for novel products like the Surface table, aspirational value needs to be considered for a probably significant percentage of first-generation adopters. That means that not only should the table be "easy to use" (of course), but also that it should be easy to appear to use. If a user (restaurant owner, patron, home owner, client, student, etc.) can easily complete certain satisfying tasks in front of themselves and others, then some bumps in other functions will be tolerated with a smile.

Exactly which tasks fulfill this aspirational value is the real trick to discern. It will be different for different demographics, but will have a likely unifying thread. Creation 'wizards' won't cut it, but being able to view realtime utilities data for your home might. A moderately ostentatious display of social (or business) connections might likewise fit the bill. There are signs that MS has significantly improved its approach to design in recent years, and that bodes well. The things that the Surface can do may not be terribly new, but the way in which it does them makes this a truly novel device. (Note to MS: the one thing that we should never, ever, ever see on the Surface table is a BSOD.)

|

|

|

|

|